In recent years, the media have paid increasing attention to adversarial examples: input data, such as images and audio, that have been modified to manipulate the behavior of machine learning algorithms. Stickers on stop signs that cause computer vision systems to mistake them for speed limit signs, glasses that fool facial recognition systems, and turtles that get classified as rifles are just some of the adversarial examples that have made headlines in the past few years.

There is growing concern about the cybersecurity implications of adversarial examples, especially as machine learning becomes an important component of more of the applications we use. AI researchers and security experts are making various efforts to educate the public about adversarial attacks and to create more robust machine learning systems.

Among these efforts is adversarial.js, an interactive tool that shows how adversarial attacks work. Released on GitHub last week, adversarial.js was developed by Kenny Song, a graduate student at the University of Tokyo doing research on the security of machine learning systems. Song hopes to demystify adversarial attacks and raise awareness about machine learning security through the project.

Crafting your own adversarial examples

Song has designed adversarial.js with simplicity in mind. It is written in TensorFlow.js, the JavaScript version of Google’s famous deep learning framework.

“I wanted to make a lightweight demo that can run on a static webpage. Since everything is loaded as JavaScript on the page, it’s really easy for users to inspect the code and tinker around directly in the browser,” Song told TechTalks.


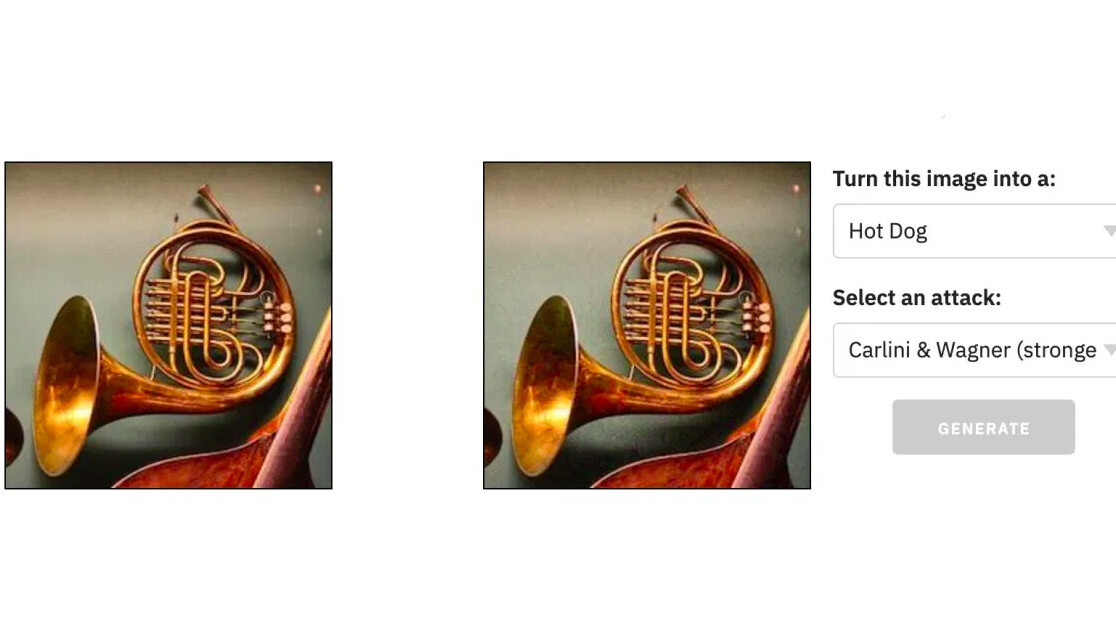

Song has also launched a demo website that hosts adversarial.js. To craft your own adversarial attack, you choose a target deep learning model and a sample image. You can run the image through the neural network to see how the model classifies it before applying any adversarial modifications.

As adversarial.js shows, a well-trained machine learning model can assign the correct label to an unmodified image with very high confidence.

The next step is to create the adversarial example. The goal is to modify the image so that it looks unchanged to a human observer but causes the target machine learning model to change its output.
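The article doesn’t say how each attack in adversarial.js is implemented. The code below is a minimal sketch of one classic technique, the fast gradient sign method (FGSM), which perturbs a toy input against an assumed linear softmax classifier. It is written in plain Python rather than TensorFlow.js so it runs without dependencies. The model, its weights, the 100-pixel “image,” and the `eps` budget are all made up for illustration, and `fgsm`, `predict`, and the other helpers are hypothetical names. For a linear model with softmax cross-entropy loss, the gradient of the loss with respect to the input is W^T (p - y). FGSM therefore nudges every pixel by `eps` in the sign of that gradient.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(W, b, x):
    """Class probabilities of a toy linear classifier: softmax(W @ x + b)."""
    logits = [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    return softmax(logits)


def sign(v):
    return (v > 0) - (v < 0)


def argmax(xs):
    return max(range(len(xs)), key=lambda i: xs[i])


def fgsm(W, b, x, label, eps):
    """Untargeted FGSM: a single step of size eps along sign(dL/dx).

    For softmax + cross-entropy on logits W @ x + b, dL/dx = W^T (p - onehot(label)).
    """
    p = predict(W, b, x)
    err = [pi - (1.0 if i == label else 0.0) for i, pi in enumerate(p)]
    grad = [sum(W[i][j] * err[i] for i in range(len(W))) for j in range(len(x))]
    # Move each pixel by eps in the direction that increases the loss for the
    # true label, then clip to the valid pixel range [0, 1].
    return [min(1.0, max(0.0, xj + eps * sign(gj))) for xj, gj in zip(x, grad)]


# Hypothetical toy "image": 100 pixels in [0, 1], two classes, made-up weights.
n = 100
w0 = [1.0 if j % 2 == 0 else -1.0 for j in range(n)]
W = [w0, [-w for w in w0]]
b = [0.0, 0.0]
x = [0.52 if j % 2 == 0 else 0.48 for j in range(n)]

x_adv = fgsm(W, b, x, label=0, eps=0.03)
p_clean, p_adv = predict(W, b, x), predict(W, b, x_adv)
print("clean:", argmax(p_clean), round(max(p_clean), 3))
print("adversarial:", argmax(p_adv), round(max(p_adv), 3))
print("largest pixel change:", max(abs(a - c) for a, c in zip(x_adv, x)))
```

In this toy setup, the predicted class flips from 0 (about 98% confidence) to 1 (about 88%), even though no pixel moves by more than 0.03. Many small, coordinated changes add up across the input. This is one commonly cited reason why high-dimensional images can be fooled by perturbations a person barely notices.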

After you choose a target label and an attack technique and click “Generate,” adversarial.js creates a slightly modified version of the image. Depending on the technique you use, the modifications might be more or less visible to the naked eye. But to the target deep learning model, the difference is tremendous.

In our case, we are trying to fool the neural network into thinking our stop sign is a 120 km/h speed limit sign. Hypothetically, this would mean that a human driver would still stop when seeing the sign, but a self-driving car that uses neural networks to make sense of the world would dangerously speed past it.
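The stop-sign example is a targeted attack. Rather than just making the model wrong, it pushes the output toward a chosen label. The code below is a minimal sketch of one targeted technique: an iterative variant of FGSM often called the basic iterative method (BIM). It assumes a made-up three-class linear model in plain Python. The labels `stop`, `speed_limit_120`, and `yield`, the weights, and the `eps`, `alpha`, and `steps` values are hypothetical, and this is not necessarily how adversarial.js implements its attacks. Each step moves the input down the loss for the target class, then projects it back to within `eps` of the original image.

```python
import math

LABELS = ["stop", "speed_limit_120", "yield"]  # hypothetical class names


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(W, x):
    """Class probabilities of a toy bias-free linear classifier: softmax(W @ x)."""
    return softmax([sum(w * xj for w, xj in zip(row, x)) for row in W])


def sign(v):
    return (v > 0) - (v < 0)


def argmax(xs):
    return max(range(len(xs)), key=lambda i: xs[i])


def targeted_bim(W, x0, target, eps, alpha, steps):
    """Targeted iterative FGSM (BIM): repeatedly step *down* the cross-entropy
    loss for the target class, projecting back into the eps-ball around x0."""
    x = list(x0)
    for _ in range(steps):
        p = predict(W, x)
        err = [pi - (1.0 if i == target else 0.0) for i, pi in enumerate(p)]
        grad = [sum(W[i][j] * err[i] for i in range(len(W))) for j in range(len(x))]
        x = [xj - alpha * sign(gj) for xj, gj in zip(x, grad)]
        # Keep every pixel within eps of the original and inside [0, 1].
        x = [min(1.0, max(0.0, min(oj + eps, max(oj - eps, xj))))
             for xj, oj in zip(x, x0)]
    return x


# Made-up model: each class "looks at" its own block of 30 pixels.
group = 30
W = [[1.0 if j // group == k else 0.0 for j in range(3 * group)] for k in range(3)]
x_stop = [0.6] * group + [0.5] * (2 * group)

target = LABELS.index("speed_limit_120")
for eps in (0.1, 0.03):
    x_adv = targeted_bim(W, x_stop, target, eps=eps, alpha=0.02, steps=10)
    p = predict(W, x_adv)
    k = argmax(p)
    print(f"eps={eps}: {LABELS[k]} ({p[k]:.2f})")
```

With eps = 0.1, the toy model’s top label becomes `speed_limit_120`. With eps = 0.03, it stays `stop`, and its confidence only falls from about 0.91 to about 0.74. A smaller perturbation budget keeps the changes less visible but makes reaching the target label less likely.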

Adversarial attacks are not an exact science, and adversarial.js shows this very well. If you tinker with the tool a bit, you’ll see that the attack techniques often do not work consistently. In some cases, the perturbation does not change the model’s output to the desired class but only lowers its confidence in the original label.
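The outcomes described above can be told apart mechanically by comparing the model’s probabilities before and after an attack. The hypothetical helper `attack_outcome` below is a minimal sketch under that assumption. The category names and the example probability vectors are made up for illustration and are not output from adversarial.js.

```python
from typing import Sequence


def argmax(xs: Sequence[float]) -> int:
    return max(range(len(xs)), key=lambda i: xs[i])


def attack_outcome(p_clean: Sequence[float], p_adv: Sequence[float],
                   true_label: int, target_label: int) -> str:
    """Hypothetical helper: sort a targeted attack's result into outcomes."""
    pred = argmax(p_adv)
    if pred == target_label:
        return "success"          # model now outputs the desired class
    if pred != true_label:
        return "misclassified"    # fooled, but into some other class
    if p_adv[true_label] < p_clean[true_label]:
        return "confidence drop"  # same label, lower confidence
    return "no effect"


# Made-up probability vectors over (stop, speed_limit_120, yield).
clean = [0.91, 0.06, 0.03]
print(attack_outcome(clean, [0.20, 0.75, 0.05], true_label=0, target_label=1))  # success
print(attack_outcome(clean, [0.74, 0.22, 0.04], true_label=0, target_label=1))  # confidence drop
```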

Understanding the threat of adversarial attacks

“I got interested in adversarial examples because they break our fantasy that neural networks have human-level perceptual intelligence,” Song says. “Beyond the immediate problems it brings, I think understanding this failure mode can help us develop more intelligent systems in the future.”

Today, machine learning models run in applications on your computer, phone, home security camera, smart fridge, smart speaker, and many other devices.

Adversarial vulnerabilities can make these machine learning systems behave unpredictably as their environment changes. They can also create security risks that we have yet to understand and deal with.

“Today, there’s a good analogy to the early days of the internet,” Song says. “In the early days, people just focused on building cool applications with new technology, and assumed everyone else had good intentions. We’re in that phase of machine learning now.”

Song warns that bad actors will find ways to take advantage of vulnerable machine learning systems that were designed for a “best-case, I.I.D. [independent and identically distributed], non-adversarial world.” Few people understand the risk landscape, partly because the knowledge is locked in research literature. Adversarial machine learning has been discussed among AI scientists since the early 2000s, and thousands of papers and technical articles on the topic already exist.

“Hopefully, this project can get people thinking about these risks, and motivate investing resources to address them,” Song says.

The machine learning security landscape

“Adversarial examples are just one problem,” Song says, adding that there are other attack vectors, such as data poisoning, model backdooring, data reconstruction, and model extraction.

There’s growing momentum across different sectors to create tools for improving the security of machine learning systems. In October, researchers from 13 organizations, including Microsoft, IBM, Nvidia, and MITRE, released the Adversarial ML Threat Matrix. This framework helps developers and adopters of machine learning technology identify possible vulnerabilities in their AI systems and patch them before malicious actors exploit them. IBM’s research unit is separately conducting extensive research on creating AI systems that are robust to adversarial perturbations. And the National Institute of Standards and Technology, the research arm of the U.S. Department of Commerce, has launched TrojAI, an initiative to combat trojan attacks on machine learning systems.

And independent researchers such as Song will continue to make their contributions to this evolving field. “I think there’s an important role for next-gen cybersecurity companies to define best practices in this space,” Song says.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for. You can read the original article here.
