
Amid the continuing pandemic news and protests against systemic racism in our society, another piece of news has come to the fore: Some of the biggest tech companies announced they would ban law enforcement from using their facial recognition technology. Amazon, IBM, and Microsoft made the move as protesters around the country call for an end to racial profiling and police brutality.

Since its inception, facial recognition technology has been met with equal doses of skepticism and controversy. One company scraped billions of photos from social media without the public’s knowledge, building a near-universal facial recognition application and inciting cries of infringement on constitutional freedom and exacerbation of racial biases.


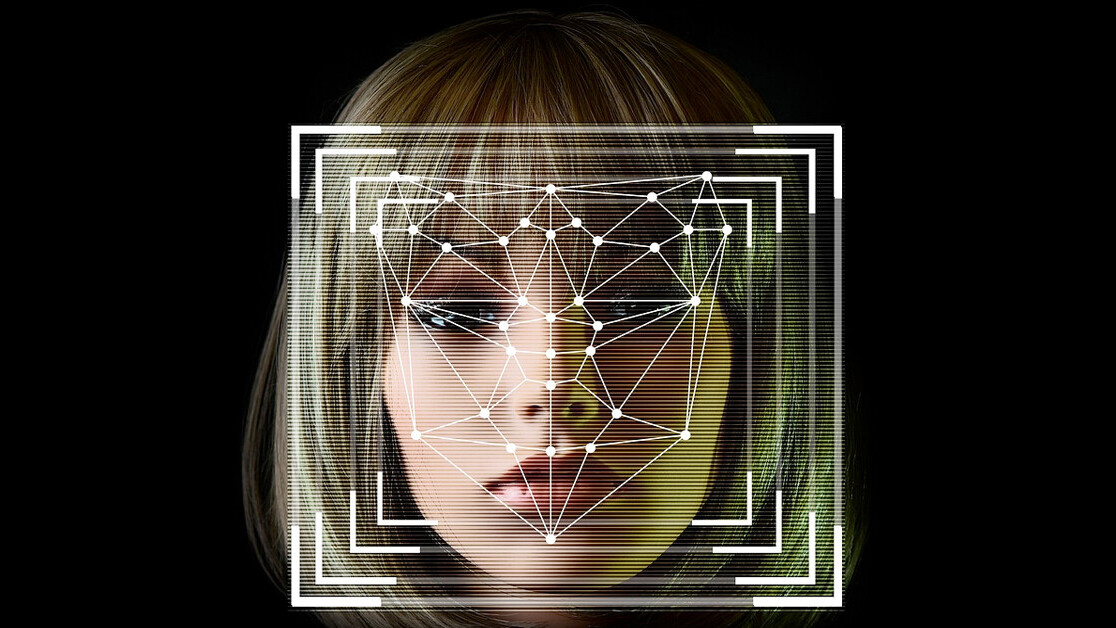

Typically, facial recognition technology captures, analyzes, and stores facial scans to establish a person’s identity. It compares the information gleaned from a scan with a database of known faces to find a match. While the social consequences of unchecked facial recognition are concerning, two other problems are just as troubling: the weak (or missing) security mechanisms meant to keep hackers out of the servers that house these databases, and the growing number of inaccurate matches, which disproportionately affect minority populations.
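
To make that one-to-many lookup concrete, here is a minimal Python sketch. It assumes each face has already been reduced to a short numeric vector. The function names, the toy vectors, and the 0.9 threshold are all hypothetical and do not come from any real recognition product.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def identify(probe, database, threshold=0.9):
    """One-to-many search: compare the probe with every stored face.

    Returns the best-matching name, or None if nothing clears the
    (hypothetical) similarity threshold.
    """
    best_name, best_score = None, threshold
    for name, template in database.items():
        score = cosine_similarity(probe, template)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name

# Toy "database of known faces" (made-up vectors, for illustration only).
KNOWN_FACES = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.1, 0.8, 0.5],
}

match = identify([0.88, 0.12, 0.31], KNOWN_FACES)
no_match = identify([-1.0, 0.0, 0.0], KNOWN_FACES)
```

Note that the search touches every record in the database, which is why both the size of that database and the security of the server holding it matter so much.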

These are very real issues that merit debate in industry, government, and community settings so we can figure out ways to use this type of technology without violating human rights. But the proverbial baby that shouldn’t get thrown out with the bathwater is a related, but fundamentally different technology: facial authentication.

Before we go deeper, it’s important to note that there are two approaches to facial (or any biometric) authentication: “match on server” and “match on device.” The former shares some of the risks of facial recognition technology because it stores the details of your most personal features, such as your face or your fingerprint, on a server, where a single breach can expose many users’ biometric data at once. There are well-publicized examples of biometric databases being hacked, which is why so many companies are committing to on-device biometrics only.

With “match on device” authentication, the facial scan is compared only with the face already stored on the device; it never leaves the device, and no cloud database is searched for a match. The scan simply confirms that the person requesting access to, say, a laptop, a smartphone, or a particular website is who they claim to be. This approach uses a one-to-one comparison and lets a user access a machine, a website, or an application without taking on the risk and hassle of a password.
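
As a rough illustration of the one-to-one check, the Python sketch below keeps a single enrolled template inside an object standing in for the device and exposes only a yes-or-no answer. The class name, the toy vectors, and the distance threshold are assumptions made for this example; real devices hold the template in dedicated secure hardware and use far more sophisticated matching.

```python
import math

class OnDeviceAuthenticator:
    """Hypothetical stand-in for a device that stores one face template."""

    def __init__(self, enrolled_template, max_distance=0.2):
        # The template stays inside this object; nothing is sent elsewhere.
        self._template = list(enrolled_template)
        self._max_distance = max_distance  # illustrative threshold

    def verify(self, probe):
        """One-to-one check: is the probe close enough to the enrolled face?"""
        return math.dist(self._template, probe) <= self._max_distance

# Enroll the owner once, then verify later scans against that one template.
phone = OnDeviceAuthenticator([0.9, 0.1, 0.3])
owner_ok = phone.verify([0.88, 0.12, 0.31])    # a fresh scan of the owner
stranger_ok = phone.verify([0.1, 0.8, 0.5])    # a scan of someone else
```

The only thing that leaves the authenticator is a boolean, and there is no database of other people’s faces to search or to steal.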

Apple’s Face ID, arguably the best-known facial authentication application, encrypts the data on a chip on the user’s device, as do Google Android biometrics and Windows 10 PCs that use cameras (or fingerprint scanners) for Windows Hello. This means that, even if these devices are stolen or lost, the biometric data remains secure from bad actors.

A common thread across these devices is that they all support industry-backed FIDO standards, which leading service providers have developed in collaboration with leading technology vendors. Those providers will use the standards to offer simpler and safer login experiences that do not depend on passwords, which are susceptible to theft and hacking.

All of this is to say that facial authentication is not only different from facial recognition, but it’s also the easiest and most secure method to log into your device, and soon to log into websites as well.

This article was originally published by Andrew Shikiar on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for. You can read the original article here.
