One of the things that caught my eye at Nvidia’s flagship event, the GPU Technology Conference (GTC), was Maxine, a platform that leverages artificial intelligence to improve the quality and experience of video-conferencing applications in real time.

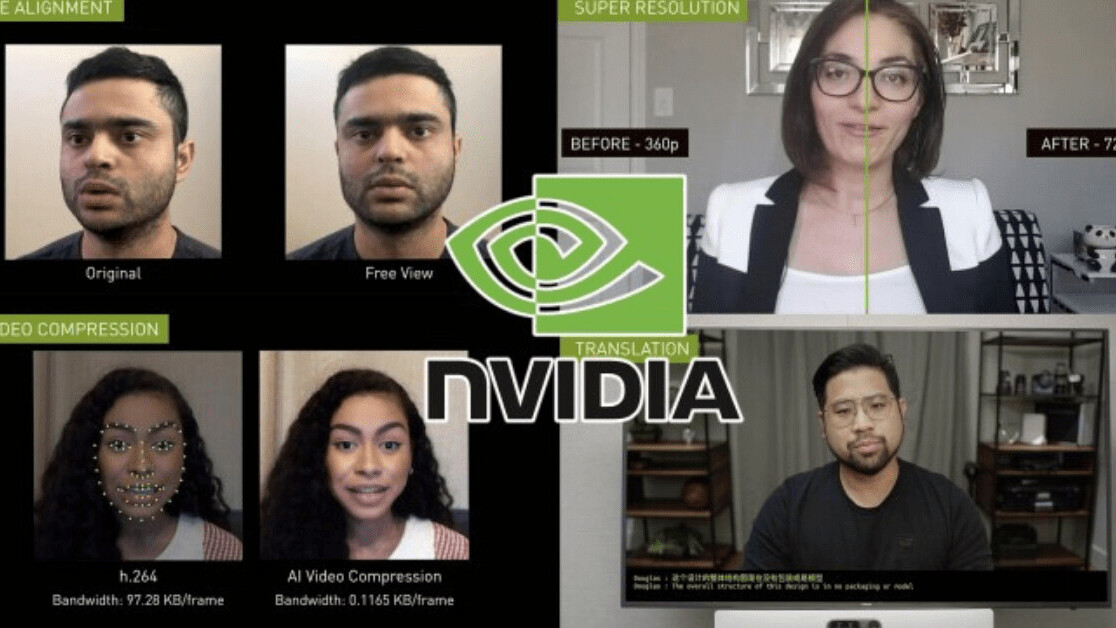

Maxine uses deep learning for resolution improvement, background noise reduction, video compression, face alignment, and real-time translation and transcription.

In this post, which marks the first installment of our “deconstructing artificial intelligence” series, we will take a look at how some of these features work and how they tie in with AI research done at Nvidia. We’ll also explore the pending issues and the possible business model for Nvidia’s AI-powered video-conferencing platform.

Super-resolution with neural networks

The first feature shown in the Maxine presentation is “super resolution,” which according to Nvidia, “can convert lower resolutions to higher resolution videos in real time.” Super resolution enables video-conference callers to send low-res video streams and have them upscaled at the server. This reduces the bandwidth requirements of video-conferencing applications and can make their performance more stable in areas where network connectivity is unreliable.
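
To put the bandwidth argument in numbers, here is a quick pixel-count comparison. The two resolutions are assumptions chosen for illustration, not figures from Nvidia, and compressed bitrates do not scale exactly with pixel count, so treat this as a rough sense of scale only.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels in a frame of the given size."""
    return width * height

sent = pixel_count(640, 360)        # assumed low-res stream uploaded by the caller
displayed = pixel_count(1280, 720)  # assumed upscaled output shown to others

ratio = displayed / sent                 # 4.0: the caller sends 4x fewer pixels
missing_fraction = 1 - sent / displayed  # 0.75: share of output pixels the upscaler must fill in
```

Doubling each dimension quadruples the pixel count, so three out of every four displayed pixels have to be produced by the upscaler rather than transmitted.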


The big challenge of upscaling visual data is filling in the missing information. You have a limited array of pixels that represent an image, and you want to expand it to a larger canvas that contains many more pixels. How do you decide what color values those new pixels get?

Traditional upscaling techniques use interpolation methods (bicubic, Lanczos, etc.) to fill the space between pixels. These techniques are general-purpose and can produce mixed results across different types of images and backgrounds.
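
For a concrete picture of what interpolation does, below is a minimal sketch of bilinear upscaling, the simplest member of the family that includes bicubic and Lanczos (those use larger neighborhoods and different weighting kernels). It assumes a grayscale image stored as a list of rows; `upscale_bilinear` is a hypothetical helper written for this illustration.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities

def upscale_bilinear(img: Image, scale: int) -> Image:
    """Upscale by an integer factor using bilinear interpolation.

    Each output pixel is mapped back to a fractional position in the input
    and set to a distance-weighted average of the four nearest input pixels.
    """
    h, w = len(img), len(img[0])
    out_h, out_w = h * scale, w * scale
    out: Image = []
    for oy in range(out_h):
        # Map the output row to a source coordinate (corners stay aligned).
        sy = oy * (h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for ox in range(out_w):
            sx = ox * (w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Blend horizontally on the two neighboring rows, then vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out
```

Every new pixel is a blend of its neighbors, so interpolation can smooth the gaps but cannot add detail (a sharp edge, a strand of hair) that the low-res input does not contain. That is the gap learned super-resolution tries to close.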

One of the benefits of machine learning algorithms is that they can be tuned to perform very specific tasks. For instance, a deep neural network can be trained on scaled-down video frames grabbed from video-conference streams and their corresponding hi-res original images. With enough examples, the neural network will tune its parameters to the general features found in video-conference visual data (mostly faces) and will be able to provide a better low- to hi-res conversion than general-purpose upscaling algorithms. In general, the narrower the domain, the better the chances that the neural network converges on very high accuracy.
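
The training setup described above boils down to pairs of (downscaled frame, original frame). Below is a minimal sketch of preparing such pairs, assuming grayscale frames and low-res inputs made by simple block averaging. Nvidia has not published its data pipeline, and `downscale_box`, `make_training_pairs`, and `mse` are hypothetical helpers. The network itself is omitted; during training, its upscaled output would be compared with the original frame using a loss such as mean squared error.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale frame as rows of pixel intensities

def downscale_box(img: Image, factor: int) -> Image:
    """Shrink a frame by averaging non-overlapping factor x factor pixel blocks."""
    h, w = len(img), len(img[0])
    if h % factor or w % factor:
        raise ValueError("frame dimensions must be divisible by factor")
    out: Image = []
    for by in range(0, h, factor):
        row = []
        for bx in range(0, w, factor):
            block = [img[y][x]
                     for y in range(by, by + factor)
                     for x in range(bx, bx + factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

def make_training_pairs(frames: List[Image], factor: int) -> List[Tuple[Image, Image]]:
    """Pair each downscaled frame (network input) with its original (target)."""
    return [(downscale_box(frame, factor), frame) for frame in frames]

def mse(pred: Image, target: Image) -> float:
    """Mean squared pixel error, a common loss for training upscaling networks."""
    diffs = [(p - t) ** 2
             for pred_row, target_row in zip(pred, target)
             for p, t in zip(pred_row, target_row)]
    return sum(diffs) / len(diffs)
```

Because every pair is drawn from video-call footage, the network only ever sees the narrow distribution it will face in production, which is the point the paragraph above makes.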

There’s already a solid body of research on using artificial neural networks for upscaling visual data, including a 2017 Nvidia paper that discusses general super resolution with deep neural networks. With video conferencing being a very specialized case, a well-trained neural network is bound to perform even better than it does on more general tasks. Aside from video conferencing, this technology has applications in other areas, such as the film industry, which uses deep learning to remaster old videos at higher quality.

Video compression with neural networks

One of the more interesting parts of the Maxine presentation was the AI video compression feature. The video posted on Nvidia’s YouTube channel shows that using neural networks to compress video streams reduces bandwidth from ~97 KB/frame to ~0.12 KB/frame, which, as users on Reddit have pointed out, is a bit exaggerated. Nvidia’s website states developers can reduce bandwidth use to “one-tenth of the bandwidth needed for the H.264 video compression standard,” which is a much more reasonable, and still impressive, figure.
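
A quick back-of-the-envelope calculation shows why the demo figures raised eyebrows. The per-frame sizes come from the demo; the 30 fps frame rate and the decimal kilobyte are assumptions, since neither is stated.

```python
# Per-frame sizes quoted in the demo video.
BASELINE_KB_PER_FRAME = 97.0
AI_KB_PER_FRAME = 0.12
FPS = 30  # assumption: the demo's frame rate is not stated

def kbps(kb_per_frame: float, fps: int = FPS) -> float:
    """Convert kilobytes per frame to kilobits per second (8 bits per byte)."""
    return kb_per_frame * 8 * fps

baseline_kbps = kbps(BASELINE_KB_PER_FRAME)  # 23,280 kbps, roughly 23 Mbps
ai_kbps = kbps(AI_KB_PER_FRAME)              # about 28.8 kbps
demo_ratio = BASELINE_KB_PER_FRAME / AI_KB_PER_FRAME  # about 808x

# Nvidia's website claims one-tenth of H.264, i.e. a 10x reduction.
CLAIMED_RATIO = 10
print(f"demo: {demo_ratio:.0f}x, website claim: {CLAIMED_RATIO}x")
```

The gap between a roughly 800x reduction in the demo and the 10x on the website is why the latter is the more credible number to plan around.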

How does Nvidia’s AI achieve such impressive compression rates? A blog post on Nvidia’s website provides more detail on how the technology works. A neural network extracts and encodes the locations of key facial features of the user for each frame, which is much more efficient than compressing pixel and color data. The encoded data is then passed on to a generative adversarial network along with a reference video frame captured at the beginning of the session. The GAN is trained to reconstruct the new image by projecting the facial features onto the reference frame.
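
The sketch below illustrates only the sender side of this idea, to show why a list of landmark positions is so much lighter than pixel data. It assumes the landmarks come from some detector (not shown) and are quantized to 16-bit integers; the packing format and the names `encode_keypoints` and `decode_keypoints` are hypothetical, not Nvidia’s wire format. The GAN that reconstructs the frame from the reference image is out of scope here.

```python
import struct
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) landmark position in pixels

def encode_keypoints(points: List[Point], width: int, height: int) -> bytes:
    """Pack landmarks as a uint16 count followed by uint16-quantized coordinates."""
    values = []
    for x, y in points:
        # Normalize by frame size, scale to 0..65535, and clamp to the uint16 range.
        values.append(max(0, min(65535, round(x / width * 65535))))
        values.append(max(0, min(65535, round(y / height * 65535))))
    return struct.pack(f"<H{len(values)}H", len(points), *values)

def decode_keypoints(payload: bytes, width: int, height: int) -> List[Point]:
    """Invert encode_keypoints, up to quantization error."""
    (count,) = struct.unpack_from("<H", payload, 0)
    values = struct.unpack_from(f"<{2 * count}H", payload, 2)
    return [(values[i] / 65535 * width, values[i + 1] / 65535 * height)
            for i in range(0, len(values), 2)]
```

With a hypothetical 68 landmarks per frame, the payload is 2 + 68 × 4 = 274 bytes, compared with 640 × 360 × 3 = 691,200 bytes for a raw RGB frame at that resolution. Conventional codecs shrink the raw frame a great deal, but they still have to describe pixels, whereas the keypoint stream only describes where the face moved.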

The work builds on previous GAN research done at Nvidia, which mapped rough sketches to rich, detailed images and drawings.

The AI video compression shows once again how narrow domains provide excellent settings for the use of deep learning algorithms.

Face realignment with deep learning

The face alignment feature readjusts the angle of users’ faces to make it appear as if they’re looking directly at the camera. This is a problem that is very common in video conferencing because people tend to look at the faces of others on the screen rather than gaze at the camera.

Although there isn’t much detail about how this works, the blog post mentions that Nvidia uses GANs for it. It’s not hard to see how this feature can be bundled with the AI compression/decompression technology. Nvidia has already done extensive research on landmark detection and encoding, including the extraction of facial features and gaze direction at different angles. The encodings can be fed to the same GAN that projects the facial features onto the reference image and let it do the rest.
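
Since Nvidia hasn’t described the method, here is one plausible way the keypoint pipeline could support it, written as a minimal sketch under stated assumptions: the keypoints are 3D, a head-pose estimator (not shown) supplies the yaw angle of the head turn, and the keypoints are rotated back to a frontal pose before being handed to the generator. `rotate_yaw` and `frontalize` are hypothetical helpers, and the sketch covers head turns only, not the finer eye-gaze adjustment.

```python
import math
from typing import List, Tuple

Point3 = Tuple[float, float, float]  # (x, y, z) facial keypoint

def rotate_yaw(points: List[Point3], angle_rad: float) -> List[Point3]:
    """Rotate keypoints about the vertical axis passing through their centroid."""
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cz = sum(p[2] for p in points) / n
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    rotated = []
    for x, y, z in points:
        dx, dz = x - cx, z - cz
        # Standard rotation about the y axis; y (height) is unchanged.
        rotated.append((cx + c * dx + s * dz, y, cz - s * dx + c * dz))
    return rotated

def frontalize(points: List[Point3], estimated_yaw_rad: float) -> List[Point3]:
    """Undo an estimated head turn so the keypoints face the camera."""
    return rotate_yaw(points, -estimated_yaw_rad)
```

Correcting the pose in keypoint space is cheap, because only a handful of coordinates change; the heavy lifting of rendering a convincing frontal face is left to the same generator that already decodes the compressed stream.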

Where does Maxine run its deep learning models?

There are a lot of other neat features in Maxine, including the integration with JARVIS, Nvidia’s conversational AI platform. Getting into all of that would be beyond the scope of this article.

But some technical issues remain to be resolved. For instance, one issue is how much of Maxine’s functionality will run on cloud servers and how much will run on user devices. In response to a query from TechTalks, a spokesperson for Nvidia said, “NVIDIA Maxine is designed to execute the AI features in the cloud so that every user can access them, regardless of the device they’re using.”

This makes sense for some of the features, such as super resolution, virtual background, auto-frame, and noise reduction. But it seems pointless for others. Take, for example, the AI video compression feature. Ideally, the neural network doing the facial-expression encoding should run on the sender’s device, and the GAN that reconstructs the video frame should run on the receiver’s device. If all these functions are carried out on servers, there would be no bandwidth savings, because users would send and receive full frames instead of the much lighter facial-expression encodings.
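
The placement argument can be checked with a few lines of arithmetic. This sketch counts the bytes that cross the users’ own network links per frame (sender upload plus receiver download), reusing the demo’s per-frame figures as assumed sizes; `Site` and `link_bytes_per_frame` are hypothetical names.

```python
from enum import Enum

class Site(Enum):
    DEVICE = "device"
    CLOUD = "cloud"

def link_bytes_per_frame(encoder_site: Site, generator_site: Site,
                         frame_bytes: int, keypoint_bytes: int) -> int:
    """Bytes per frame on the users' links: sender upload plus receiver download.

    A model running on the device lets only the compact keypoint encoding
    travel; a model running in the cloud needs the full (conventionally
    compressed) frame sent to or from the server instead.
    """
    upload = keypoint_bytes if encoder_site is Site.DEVICE else frame_bytes
    download = keypoint_bytes if generator_site is Site.DEVICE else frame_bytes
    return upload + download

FRAME_BYTES = 97_000   # assumed, from the demo's ~97 KB/frame
KEYPOINT_BYTES = 120   # assumed, from the demo's ~0.12 KB/frame

all_cloud = link_bytes_per_frame(Site.CLOUD, Site.CLOUD, FRAME_BYTES, KEYPOINT_BYTES)
on_device = link_bytes_per_frame(Site.DEVICE, Site.DEVICE, FRAME_BYTES, KEYPOINT_BYTES)
```

With both models in the cloud, the users’ links carry 194,000 bytes per frame, the same as with no AI compression at all; with both on the devices, they carry 240.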

Ideally, there should be some sort of configuration that lets users strike the right balance between local and cloud AI inference, depending on their network and compute availability. For instance, a user with a workstation and a strong GPU might want to run all deep learning models on their computer in exchange for lower bandwidth usage or cost savings. On the other hand, a user joining a conference from a mobile device with low processing power would forgo local AI compression and defer virtual background and noise reduction to the Maxine server.
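
Such a configuration might look something like the following minimal sketch. Nothing here reflects Maxine’s actual SDK: `DeviceProfile`, `plan_placement`, the feature names, and the "local"/"cloud"/"off" values are all hypothetical. It encodes the two example users from the paragraph above, lets the user override individual features, and rejects cloud-side compression because, as discussed, it saves no bandwidth.

```python
from dataclasses import dataclass
from typing import Dict, Optional

FEATURES = ("compression", "super_resolution", "virtual_background", "noise_reduction")

@dataclass
class DeviceProfile:
    name: str
    has_capable_gpu: bool  # can the device run the models in real time?

def plan_placement(profile: DeviceProfile,
                   overrides: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Choose "local", "cloud", or "off" for each feature."""
    if profile.has_capable_gpu:
        # Strong GPU: run everything locally to minimize bandwidth.
        plan = {feature: "local" for feature in FEATURES}
    else:
        # Weak device: skip AI compression and let the server do the rest.
        plan = {feature: "cloud" for feature in FEATURES}
        plan["compression"] = "off"
    if overrides:
        plan.update(overrides)  # user-chosen balance wins over the defaults
    if plan["compression"] == "cloud":
        # Cloud-side keypoint encoding saves no bandwidth on the user's link.
        raise ValueError("compression must run locally or be turned off")
    return plan
```

For example, `plan_placement(DeviceProfile("phone", False))` turns compression off and sends the other features to the cloud, while a workstation owner could pass `{"noise_reduction": "cloud"}` to free up some local GPU time.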

What is Maxine’s business model?

With the COVID-19 pandemic pushing companies to implement remote-working protocols, it seems as good a time as any to market video-conferencing apps. And with AI still at the peak of its hype cycle, companies tend to rebrand their products as “AI-powered” to boost sales. So I’m generally a bit skeptical about anything that has “video conferencing” and “AI” in its name these days, and I think many of these products will not live up to their promise.

But I have a few reasons to believe Nvidia’s Maxine will succeed where others fail. First, Nvidia has a track record of doing reliable deep learning research, especially in computer vision and more recently in natural language processing. The company also has the infrastructure and financial means to keep developing and improving its AI models and to make them available to its customers. Nvidia’s GPU servers and its partnerships with cloud providers will enable it to scale as its customer base grows. And its pending acquisition of mobile chip designer Arm will put it in a suitable position to move some of these AI capabilities to the edge (maybe a Maxine-powered video-conferencing camera in the future?).

Finally, Maxine is an ideal example of narrow AI being put to good use. As opposed to computer vision applications that try to address a wide range of issues, all of Maxine’s features are tailored to a specific setting: a person talking to a camera. As various experiments have shown, even the most advanced deep learning algorithms lose their accuracy and stability as their problem domain expands. Conversely, neural networks are more likely to capture the real data distribution as their problem domain becomes narrower.

But as we’ve seen on these pages before, there’s a huge difference between an interesting piece of technology that works and one that has a successful business model.

Maxine is currently in early access mode, so a lot of things might change in the future. For the moment, Nvidia plans to make it available as an SDK and a set of APIs hosted on Nvidia’s servers that developers can integrate into their video-conferencing applications. Corporate video conferencing already has two big players, Microsoft Teams and Zoom. Teams already has plenty of AI-powered features, and it wouldn’t be hard for Microsoft to add some of the functionality Maxine offers.

What will be the final pricing model for Maxine? Will the bandwidth savings be enough to justify its costs? Will large players such as Zoom and Microsoft Teams have incentives to partner with Nvidia, or will they add their own versions of the same features? Will Nvidia continue with the SDK/API model or develop its own standalone video-conferencing platform? Nvidia will have to answer these and many other questions as developers explore its new AI-powered video-conferencing platform.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for. You can read the original article here.
