A multi-disciplinary team of researchers from Technische Universität Berlin recently created a neural ‘network’ that could one day surpass human brain power with a single neuron.

Our brains have approximately 86 billion neurons. Combined, they make up one of the most advanced organic neural networks known to exist.

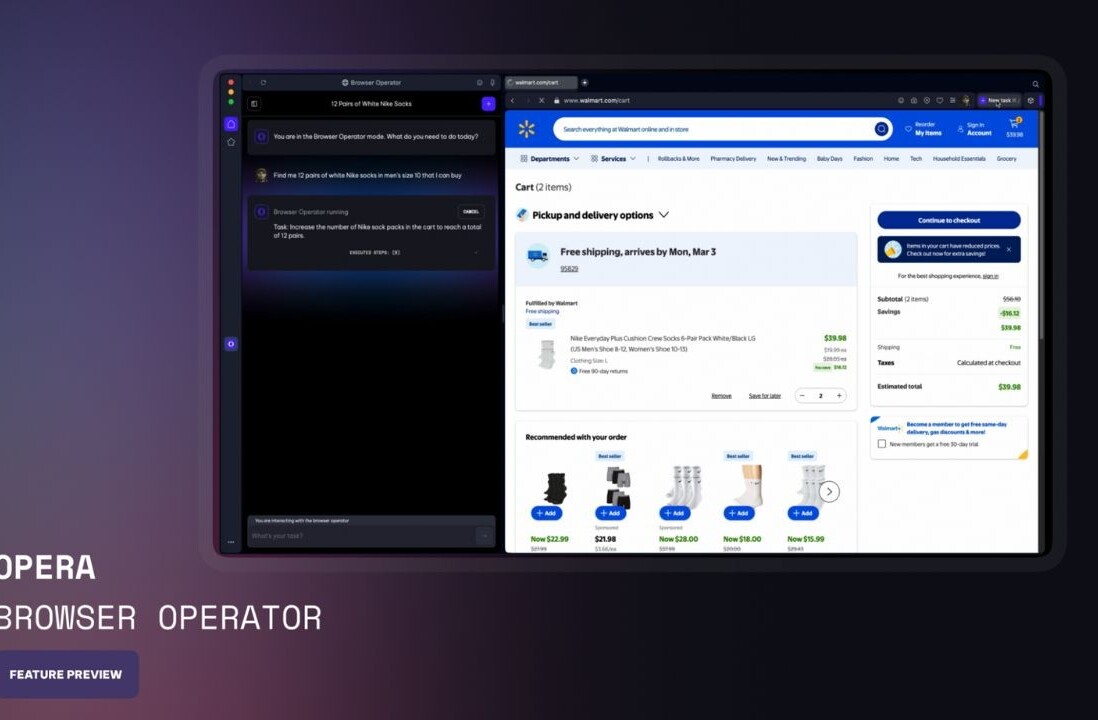

Current state-of-the-art artificial intelligence systems attempt to emulate the human brain by building multi-layered neural networks designed to cram as many neurons as possible into as little space as possible.

Unfortunately, such designs require massive amounts of power and produce outputs that pale in comparison to the robust, energy-efficient human brain.

Per an article from The Register’s Katyanna Quach, scientists estimate that training just one neural “super network” can carry an energy cost on the scale of a car trip to the Moon and back:

> Neural networks, and the amount of hardware needed to train them using huge data sets, are growing in size. Take GPT-3 as an example: it has 175 billion parameters, 100 times more than its predecessor GPT-2.
>
> Bigger may be better when it comes to performance, yet at what cost does this come to the planet? Carbontracker reckons training GPT-3 just once requires the same amount of power used by 126 homes in Denmark per year, or driving to the Moon and back.

The Berlin team decided to challenge the idea that bigger is better by building a neural network that uses a single neuron.

Typically, a network needs more than one node. In this case, however, the single neuron is able to network with itself by spreading out over time instead of space.
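To make the space-for-time trade concrete, here is a minimal Python sketch that counts resources under a deliberately idealized model, which is an assumption rather than the paper's own timing analysis: a conventional network gets one physical neuron per hidden node and fires a whole layer in one step, while the folded network has a single neuron that handles one node per time slot. The `Cost`, `spatial_cost` and `folded_cost` names and the layer widths are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cost:
    physical_neurons: int  # hardware nodes that must exist
    time_steps: int        # sequential steps for one forward pass


def spatial_cost(hidden_widths):
    # Idealized conventional network: one physical neuron per hidden node,
    # and every node in a layer fires simultaneously (one step per layer).
    return Cost(physical_neurons=sum(hidden_widths), time_steps=len(hidden_widths))


def folded_cost(hidden_widths):
    # Idealized folded network: one physical neuron visits every hidden
    # node in turn, spending one time slot per node.
    return Cost(physical_neurons=1, time_steps=sum(hidden_widths))


widths = [100, 100, 100]  # hypothetical: three hidden layers of 100 nodes
print(spatial_cost(widths))  # Cost(physical_neurons=300, time_steps=3)
print(folded_cost(widths))   # Cost(physical_neurons=1, time_steps=300)
```

In this model the number of sequential steps grows with the total number of nodes, so the duration of each time slot largely determines how fast a folded network can run.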

Per the team’s research paper:

> We have designed a method for complete folding-in-time of a multilayer feed-forward DNN. This Fit-DNN approach requires only a single neuron with feedback-modulated delay loops. Via a temporal sequentialization of the nonlinear operations, an arbitrarily deep or wide DNN can be realized.
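The folding idea can be illustrated with a small Python sketch. It is a purely digital analogy built on simplifying assumptions: a `tanh` nonlinearity stands in for the physical neuron, and a Python list holding the previous layer's outputs stands in for the feedback-modulated delay loops. The function names and the random 4-5-5-3 network are hypothetical. The folded version calls the single `neuron` once per time step and reproduces the output of the conventional layer-by-layer computation.

```python
import math
import random


def neuron(drive):
    """The single nonlinear element; tanh is an assumed choice of nonlinearity."""
    return math.tanh(drive)


def random_layers(widths, seed=0):
    """Hypothetical fully connected network with random weights and biases."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(widths, widths[1:]):
        weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        biases = [rng.uniform(-1, 1) for _ in range(n_out)]
        layers.append((weights, biases))
    return layers


def forward_spatial(x, layers):
    """Conventional view: each node is its own copy of the neuron, a whole layer at once."""
    for weights, biases in layers:
        x = [neuron(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(weights, biases)]
    return x


def forward_folded(x, layers):
    """Folded-in-time view: the same neuron fires once per time step.

    `previous` plays the role of the delay loops, holding the last layer's
    outputs; `trace` records (time_step, layer, node, output) for each firing.
    """
    previous = list(x)
    trace = []
    t = 0
    for layer_index, (weights, biases) in enumerate(layers):
        current = []
        for node_index, (row, b) in enumerate(zip(weights, biases)):
            # Re-weight the stored signals for this time slot, then fire the neuron.
            drive = sum(w * p for w, p in zip(row, previous)) + b
            out = neuron(drive)
            current.append(out)
            trace.append((t, layer_index, node_index, out))
            t += 1
        previous = current  # the finished layer feeds the next one
    return previous, trace


widths = [4, 5, 5, 3]  # hypothetical: 4 inputs, two hidden layers of 5, 3 outputs
layers = random_layers(widths)
x = [0.5, -0.2, 0.1, 0.9]
folded_out, trace = forward_folded(x, layers)
assert all(math.isclose(a, b) for a, b in zip(folded_out, forward_spatial(x, layers)))
print(len(trace))  # 13: one time step per non-input node
```

The only thing that changes from one time step to the next is the set of weights applied to the stored signals, which mirrors the article's description of one neuron being weighted differently over time.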

In a traditional neural network, such as GPT-3, each connection between neurons carries an adjustable weight, and training fine-tunes those weights to improve results. Typically, more neurons mean more weights, or parameters, and more parameters produce finer results.
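The link between network size and parameter count can be checked with a few lines of arithmetic. This Python sketch assumes a plain fully connected network (GPT-3's transformer architecture is counted differently) in which a layer with n_in inputs and n_out nodes has n_in × n_out weights plus n_out biases. The layer sizes are hypothetical, with 784 inputs chosen to match a 28 × 28-pixel image.

```python
def dense_parameter_count(widths):
    """Weights plus biases in a fully connected feed-forward network.

    Each layer with n_in inputs and n_out nodes contributes
    n_in * n_out weights and n_out biases.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(widths, widths[1:]))


print(dense_parameter_count([784, 100, 10]))  # 79510
print(dense_parameter_count([784, 200, 10]))  # 159010
```

Doubling the hidden layer from 100 to 200 nodes roughly doubles the parameter count, which is the kind of growth in hardware and energy that the Berlin team set out to sidestep.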

But the Berlin team figured out that they could perform a similar function by weighting the same neuron differently over time instead of spreading differently weighted neurons over space.

Per a press release from Technische Universität Berlin:

> This would be akin to a single guest simulating the conversation at a large dinner table by switching seats rapidly and speaking each part.

“Rapidly” is putting it mildly, though. The team says their system could theoretically approach the universe’s speed limit by using lasers to create time-based feedback loops in the neuron: neural networking at or near the speed of light.

What does this mean for AI? According to the researchers, this could counter the rising energy costs of training ever-larger networks. Eventually, we’re going to run out of feasible energy if usage requirements keep doubling or tripling as networks grow.

But the real question is whether a single neuron stuck in a time loop can produce the same results as billions.

In initial testing, the researchers used the new system to perform computer vision tasks. It was able to remove manually added noise from pictures of clothing and reconstruct an accurate image, something that’s considered fairly advanced for modern AI.

With further development, the scientists believe the system could be expanded to create “a limitless number” of neuronal connections from neurons suspended in time.

It’s feasible such a system could surpass the human brain and become the world’s most powerful neural network, something AI experts refer to as a “superintelligence.”
