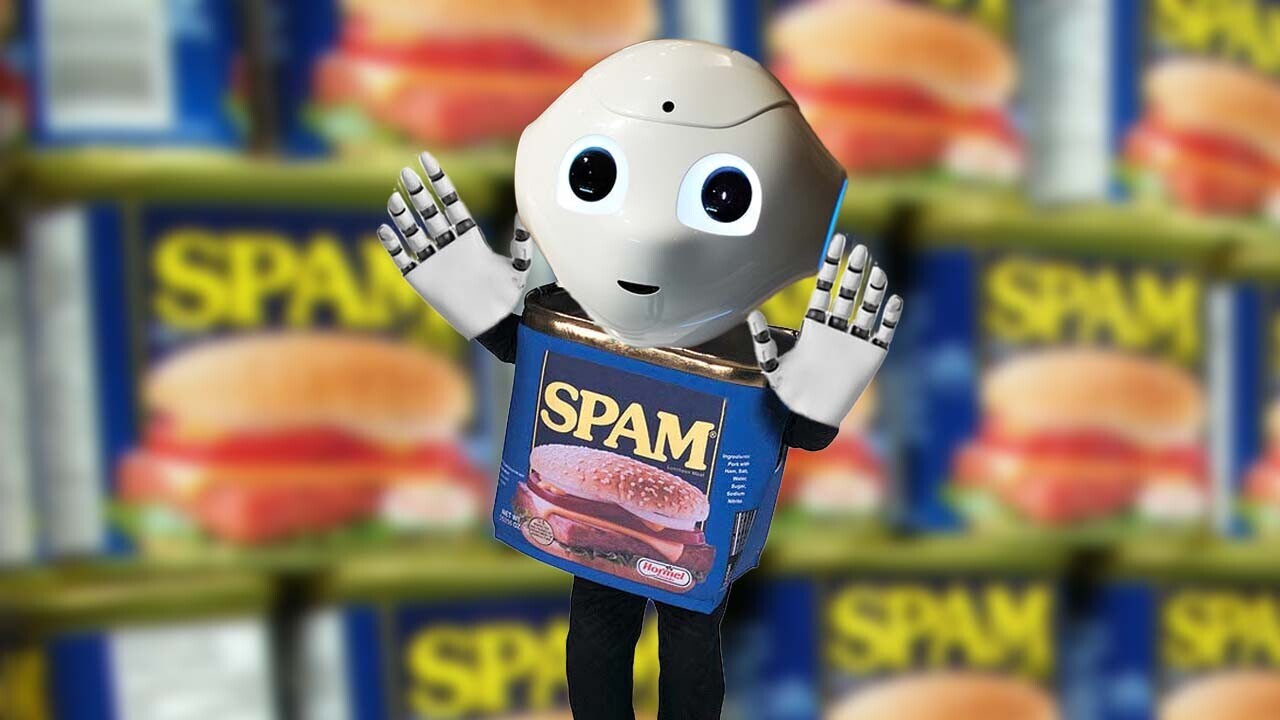

All the publishers and editors out there thinking of replacing their journalists with AI might want to pump the brakes. Everybody’s boss, the Google algorithm, classifies AI-generated content as spam.

John Mueller, Google’s SEO authority, laid the issue to rest while speaking at a recent “Google Search Central SEO office-hours hangout.”

Per a report from Search Engine Journal’s Matt Southern, Mueller says content produced by GPT-3 and other content generators is not considered quality content, no matter how convincingly human it reads:

> These would, essentially, still fall into the category of automatically-generated content which is something we’ve had in the Webmaster Guidelines since almost the beginning.
>
> My suspicion is maybe the quality of content is a little bit better than the really old school tools, but for us it’s still automatically-generated content, and that means for us it’s still against the Webmaster Guidelines. So we would consider that to be spam.

Southern’s report points out that this has pretty much always been the case. For better or worse, Google’s leaning towards respecting the work of human writers. And that means keeping bot-generated content to a minimum. But why?

Let’s play devil’s advocate for a moment. Who do Google and John Mueller think they are? If I’m a publisher, shouldn’t I have the right to use whatever means I want to generate content?

The answer is yes, with a cup of tea on the side. The market can certainly sort out whether readers want opinionated news from human experts or… whatever an AI can hallucinate.

But that doesn’t mean Google has to put up with it. Nor should it. No corporation whose shareholders are in their right minds would allow AI-generated content to represent its “news” section.

There’s simply no way to verify the accuracy of an AI-generated report unless you have capable journalists fact-checking everything the AI asserts.

And that, dear readers, is a bigger waste of time and money than just letting humans and machines work in tandem from the inception of a piece of content.

Most of us human journalists use a number of technological tools to do our jobs. We use spell and grammar checkers to try and root out typos before we turn our copy in. And the software we use to format and publish our work usually has about a dozen plug-ins managing SEO, tags and other digital markers to help us reach the right audience.

But, ultimately, due diligence comes down to human responsibility. And until an AI can actually be held accountable for its mistakes, Google’s doing everyone a favor by marking anything the machines have to say as spam.

There are, of course, numerous caveats. Google does allow many publishers to use AI-generated summaries of news articles or to use AI aggregators to push posts.

Essentially, big G just wants to make sure there aren’t bad actors out there generating fake news articles to game SEO for advertising hits.

You don’t have to love the mainstream media to know that fake news is bad for humanity.

You can watch the whole Hangout below:
