How will we interact with a growing stream of personalized, curated content over the next generation?

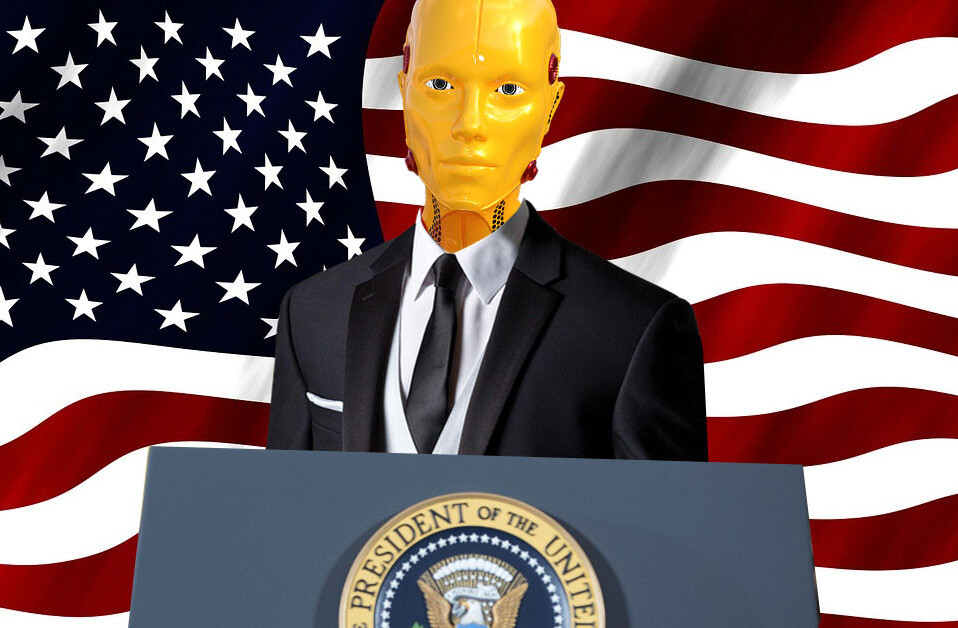

Today, intelligent systems continuously analyze data, make judgments, and refine those judgments, and the sophistication of those systems will no doubt continue to grow for the foreseeable future.

Today the primary motivation is commercial: predicting and influencing consumer behavior. It is not hard to imagine this capability being extended to non-commercial realms as well.

In any case, the intelligent systems we currently call “AI” are going to be making increasingly important decisions for us and about us over the next generation.

These capabilities will evolve alongside what we currently call the Internet of Things — a web of sensors and other devices collecting data about individuals and their physical environment.

This sensor-laden space, extending from one’s body to the buildings on the street, will inevitably stoke developments in intelligent systems and data analytics, if only because the increasing volumes of data collected will require more and more analysis.

The emergence of 5G networks will enable rich media anywhere with very low latency, which opens up many possibilities for real-time interaction with immersive 3D content.

As a pedestrian of the future walks down a city street, there will be enough accumulated and real-time data about that person in various databases – consumer, employment, health and medical, social media, location, and so on – that a remarkably granular and predictive profile will emerge, usable for any number of purposes.

Given a suitable infrastructure, the groundwork will have been laid for personalized sensory enhancements of the environment as well as an endless stream of immediately relevant content, much of it locked to physical locations.

But there is a piece missing: How do we see this new, intelligent environment? How do we interact with it in useful ways?

The phone

Today we have the smartphone. It has served as a ubiquitous and powerful connected platform that some innovators have exploited to create the first wave of consumer-focused augmented reality (AR) apps.

The smartphone has many elements a wireless AR system needs, but its display and user interface are both limited by the phone’s form factor. It’s amazing what we do on such a diminutive device. Nonetheless, the smartphone that enabled consumer AR now limits its progress.

AR on a handheld device has three fundamental limitations. The first is discovery: the user somehow has to know what in the physical environment has been enhanced with digital content. As a result, AR is not a full-time way of seeing the world but rather a special occasion.

This part-time approach, while useful for a lot of vertical applications, doesn’t open the door to a transformative, general-purpose, full-time platform.

The second limitation is that the user’s hands are occupied with the device, which limits interaction with the content. The third is that looking through a frame, whether 3 inches or 13 inches, is not the same as seeing full-field-of-view, high-resolution imagery that appears to be part of the physical space. The handheld device doesn’t provide immersion.

Fully expressing this IoT-enabled, AI-curated world to the senses will require a new device, maybe digital eyewear or maybe more futuristic technology, that can render the sea of available content as meaningful 3D imagery. Perhaps it will be an extension of the smartphone.

However the functionality is delivered as a product, full-time, wide-field-of-view AR could trigger mass-market adoption of extended reality (XR).

The benefits of this kind of smart environment, visualized through full-time AR, are potentially enormous, commercially and societally, and ultimately unique for each person.

Multiple apps could run at once in a user’s field of view: location-specific information locked to buildings and other physical structures; subtle guidance in navigation and commercial choices, including food; an ongoing record of vital signs, interpreted against one’s medical history, with automatic emergency intervention if necessary; a new kind of immersive social media that is inhabited rather than viewed on a phone or computer screen, blending digital relationships, realistic avatars, and the physical world; and much more.

Under the right circumstances, such a mass-market AR platform could supercharge other related technologies. What could emerge is a quasi-physical, quasi-digital world in which millions of people spend all day working, socializing, shopping, and learning, treating their AR interface to the world much like prescription eyewear.

Effects on users and society

But a threshold is crossed when the visual illusion becomes indistinguishable from the physical world in which it is placed, and when the illusion is maintained for hours on end.

As this becomes the environment in which one lives, it in effect becomes that person’s reality. At a certain point, opting out becomes impossible unless one is willing to forgo modernity, and eventually even basic social functions become difficult or impossible without some degree of technological accommodation.

In roughly ten years, the smartphone has gone from a mobile phone with a touchscreen to an essential wearable computer for the modern business and social worlds, and it is reasonable to assume that such a compelling immersive environment would follow a similar adoption trajectory.

Living in a full-time, compellingly realistic semi-illusion curated by intelligent systems interpreting an ocean of data opens up ethical, social, and policy questions that vertical industrial applications of AR do not.

The long-term inability to opt out of widely adopted technologies only amplifies the large-scale impact.

Discussions about the non-technical effects of the convergence of some of today’s most promising technologies should therefore happen now, before the foundation is poured, so to speak.

Part of this discussion has already begun, in recent debates over privacy, identity, agency, and the manipulation of public opinion through social media.

Addressing and resolving the concerns about today’s comparatively tame websites could prepare us to tackle much bigger versions of the same challenges in tomorrow’s sentient environment, curated by intelligent systems and visualized through XR.

This story is republished from TechTalks, the blog that explores how technology is solving problems… and creating new ones.
