There’s no positive use case for developing an app with the sole purpose of using AI to predict what a clothed woman, in an image, would look like nude – full stop. Developing such an app, as did the person(s) responsible for Deepnudes, is an act of hate and an attack on women.

At best, those who would perpetrate such an action are willfully ignorant misogynists uninterested in understanding the potential impact of their actions. At worst, they are agents of evil who take joy in the knowledge that their work will almost surely result in violence against women.

Trigger warning: this article contains references to sexual violence. If you're sensitive to these topics, please read with caution.

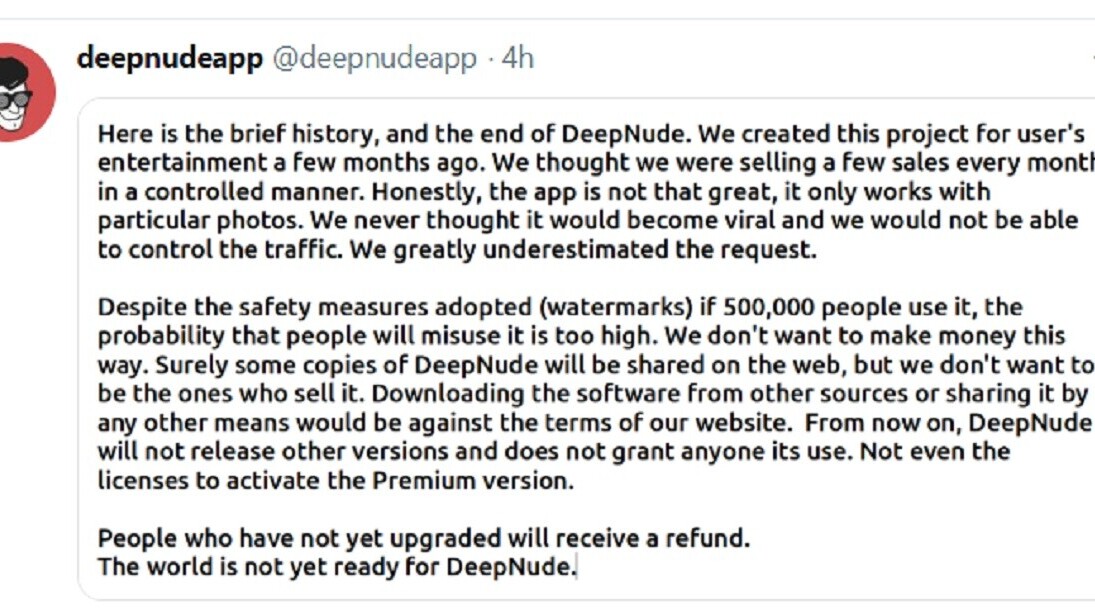

The Deepnudes app recently made headlines after Motherboard ran a story exposing it. In the wake of the article, its developer(s), who had been selling the app online for months, shut it down today with the following announcement:

— deepnudeapp (@deepnudeapp) June 27, 2019

As the announcement points out, killing the app doesn't stop Deepnudes from propagating. Its developer(s) intentionally made it an offline program. It will live on and will likely receive further development from other bad actors who come across it. Thanks to the person(s) who created it, Deepnudes is here to stay.

Maybe that doesn't sound so bad to you. Perhaps you've taken a whimsical view akin to the one the developer(s) display in their Twitter profile: "The super power you always wanted," it reads. Can any harm come from someone snapping a quick pic of a woman and then tapping a button to see what she'd look like naked? Yes. Absolutely.

We live in a world where defense lawyers consistently defend rapists by claiming their female victims were dressed salaciously and presented themselves in a manner that made it too difficult for the perpetrator to control their impulses. Deepnudes and all the other disgusting, horrifying AI models like it can only exacerbate this problem. What are the odds that nobody will ever find a Deepnude of their significant other on someone else's device, believe it's real, and snap? It would be a never-ending exercise to come up with all the ways people can "misuse" this product. But "misuse" implies there's an ethical use for it, and there is not.

The developer(s) seem to believe they’re absolved of societal responsibility because they think someone would have eventually developed it anyway. This is a stupid and offensive excuse for developing a product. Ethical developers don’t create tools that cannot be used for good, then sell and profit off of them just because they think someone else might beat them to it.

The problem here isn't with artificial intelligence. AI is not exploiting and dehumanizing women; humans are. There is no inevitable scenario wherein Deepnudes organically emerges as a side effect of useful deep learning research. No decent human would develop this particular app; there's no positive use case for it outside of a research facility wherein ethical scientists develop solutions to detect and combat it.

Unfortunately, detecting and countering the images doesn't help those exploited by them. Deepnudes' victims already know the images are fake; it's the social, mental, and potentially physical harm done by them that matters. AI experts and scientists can't save us from that.

Our only hope, and currently the only viable solution, is not only to vehemently reject this app and all similarly degrading, dehumanizing, exploitative software, but also to declare their development and use universally reprehensible and incompatible with decent society.
