Emotion recognition AI is bunk.

Don’t get me wrong, AI that recognizes human sentiment and emotion can be very useful. For example, it can help identify when drivers are falling asleep behind the wheel. But what it cannot do is discern how a human being is actually feeling from the expression on their face.

You don’t have to take my word for it; you can try it yourself here.

Dovetail Labs, a scientific research and consultancy company, recently created a website that explains how modern “emotion recognition” systems built on deep learning work.

Typically when companies do stuff like this, the point is to show off their products so you’ll want to purchase something from them. But here, Dovetail Labs is demonstrating how awful emotion recognition is at recognizing human emotion.

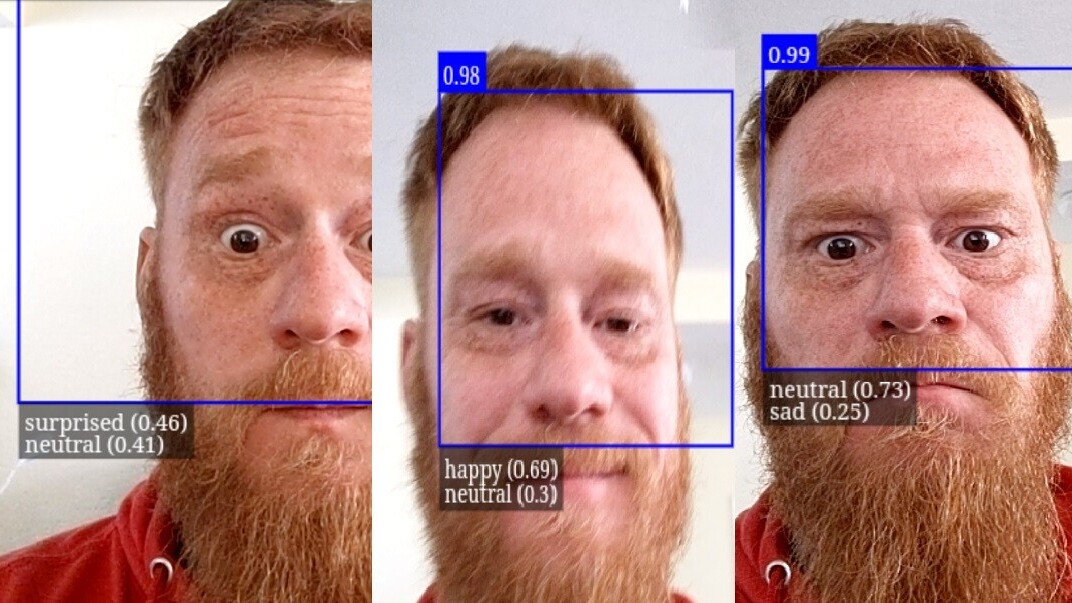

If you’re a bit reluctant to give the site access to your webcam (or just don’t feel like trying it out on yourself), take a gander at the featured image for this article above. I assure you, the picture on the right is not my “sad” face, no matter what the AI says.

And that’s bothersome because, as far as AI is concerned, I have a pretty easy-to-read face. But, as Dovetail Labs explains in the above video, AI doesn’t actually read our faces.

Instead of understanding the vast spectrum of human emotion and expression, it basically reduces whatever it perceives we’re doing with our face to the AI equivalent of an emoji symbol.
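To make that reduction concrete, here is a minimal sketch of the final step such a system might take. It is not Dovetail Labs’ code or any vendor’s actual model: the label list, the `classify_expression` function, and the scores are all made up for illustration. The point is only that whatever the network computes, the output is forced into one of a handful of preset labels.

```python
import math

# Hypothetical fixed label set (an assumption; real systems vary).
LABELS = ["happy", "sad", "angry", "surprised", "neutral"]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify_expression(scores):
    """Collapse per-label scores into a single label and its probability."""
    probs = softmax(scores)
    best = max(range(len(LABELS)), key=lambda i: probs[i])
    return LABELS[best], probs[best]

# Made-up scores for an ambiguous face, nearly tied between "sad" and "neutral".
label, confidence = classify_expression([0.1, 1.2, 0.3, 0.2, 1.1])
print(label, round(confidence, 2))
```

Even when two labels are almost tied, only one of them comes out the other end, and nothing outside the preset list can ever be reported.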

And, despite it being incredibly basic, it still suffers from the same biases as all facial recognition AI: emotion recognition systems are racist.

Per the video:

A recent study has shown that these systems read the faces of Black men as more angry than the faces of white men, no matter what their expression.

This is a big deal for everyone. Companies across the world use emotion recognition systems for hiring, law enforcement agencies use them to profile potential threats, and they’re even being developed for medical purposes.

Unless emotion recognition systems work the same across demographics, their use is harmful, even in so-called “human in the loop” scenarios.

[Further reading: Why using AI to screen job applicants is almost always a bunch of crap]
